Softmax#

In this chapter, we take a brief look at the theory behind neural networks, in particular the relation between training a network and probability distributions.

import numpy as np

import matplotlib.pyplot as plt

Likelihood#

Neural networks model probability distributions: \(P(y_i | \theta)\). This function gives the probability of sampling \(y_i\) given the parameters \(\theta\).

If we draw several independent values from this distribution, e.g. by calculating outputs using the network, their joint probability is the product of the individual probabilities: \( P(y_0, y_1, \ldots | \theta) = \prod_i P(y_i|\theta)\).

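In a neural network, \(\theta\) is represented by the weights. Viewed as a function of \(\theta\) for fixed observed samples, the joint probability is called the likelihood: \(L(y_0, y_1, \ldots | \theta) = P(y_0, y_1, \ldots | \theta)\). Improving the weights improves the model, which means the observed data receive a higher likelihood.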

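Thus, the goal is to find the parameters (weights) \(\theta^*\) that maximize the likelihood:

\[ \theta^* = \arg\max_\theta L(y_0, y_1, \ldots | \theta) \]

Combining the equations gives:

\[ \theta^* = \arg\max_\theta \prod_i P(y_i|\theta) \]

Since products of many probabilities are difficult to work with, we take the logarithm, which turns the product into a sum without changing the position of the maximum:

\[ \theta^* = \arg\max_\theta \sum_i \log P(y_i|\theta) \]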
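The benefit of working with sums of logarithms can be seen numerically. The following is a minimal sketch: the probabilities are hypothetical, randomly generated values (they do not come from a real network), chosen only to show that a product of many small numbers underflows while the sum of their logarithms stays finite.

```python
import numpy as np

# Hypothetical per-sample probabilities P(y_i | theta); not from a real network.
rng = np.random.default_rng(0)
probs = rng.uniform(0.01, 0.2, size=1000)

# The direct product of 1000 small numbers underflows to 0.0 in float64.
likelihood = np.prod(probs)

# The sum of the logarithms carries the same information without underflow.
log_likelihood = np.sum(np.log(probs))

print(likelihood)
print(log_likelihood)
```

Because the logarithm is monotonically increasing, the parameters that maximize the sum are the same ones that maximize the product.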

Training a neural network means maximizing the log-likelihood!

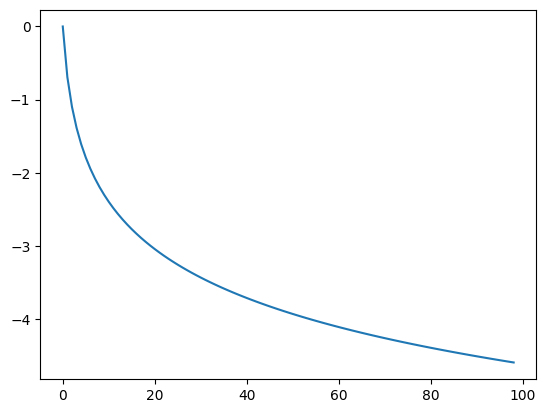
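To make the idea of maximizing the log-likelihood concrete, here is a toy sketch. It assumes a model with a single parameter (a coin with heads probability `theta`) instead of a neural network, and the samples are hypothetical. The function `log_likelihood` and the grid of candidate values are illustrative choices, not part of any library.

```python
import numpy as np

# Hypothetical data: 1 = heads, 0 = tails (7 heads out of 10 throws).
samples = np.array([1, 1, 0, 1, 1, 0, 1, 1, 0, 1])

def log_likelihood(theta, y):
    # Sum over all samples of log P(y_i | theta) for a coin-flip (Bernoulli) model.
    return np.sum(y * np.log(theta) + (1 - y) * np.log(1 - theta))

# Try many candidate parameters and keep the one with the highest log-likelihood.
thetas = np.linspace(0.01, 0.99, 99)
scores = np.array([log_likelihood(t, samples) for t in thetas])
best_theta = thetas[np.argmax(scores)]

print(best_theta)
```

The best candidate coincides with the fraction of heads in the data. A neural network has far too many weights for such a grid search, so in practice the same objective is optimized with gradient-based methods.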

Negative Log-Likelihood#

If maximizing the log-likelihood improves the network, so does minimizing the negative log-likelihood:

\[ \theta^* = \arg\min_\theta \left( -\sum_i \log P(y_i|\theta) \right) \]

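Usually, the negative log-likelihood is used as the loss function. It is calculated by summing the negative logarithms of the probabilities that the network assigns to the correct classes:

\[ \mathrm{NLL}(\theta) = -\sum_i \log P(y_i|\theta) \]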
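The calculation of this loss can be sketched in NumPy as follows. The function name `negative_log_likelihood`, the probability table, and the labels are all hypothetical and serve only as an illustration.

```python
import numpy as np

def negative_log_likelihood(probs, labels):
    """Sum of -log(probability assigned to the correct class) over all samples."""
    # For each row (sample), pick the probability of its correct class.
    correct_class_probs = probs[np.arange(len(labels)), labels]
    return -np.sum(np.log(correct_class_probs))

# Hypothetical predicted class probabilities for 3 samples and 3 classes.
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
])
labels = np.array([0, 1, 2])  # hypothetical index of the correct class per sample

loss = negative_log_likelihood(probs, labels)
print(loss)
```

Only the probability of the correct class enters the sum for each sample; the probabilities of the other classes matter indirectly, because all probabilities of a sample add up to 1.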

Example#

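Softmax:

\[ S(y_i) = \frac{e^{y_i}}{\sum_j e^{y_j}} \]

Here \(y_i\) denotes the raw output of the \(i\)-th output neuron.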

Let’s assume our network has three classes: boat, dog and cat.

The network has three output neurons and, for an input image, calculates the following:

If we apply softmax to the output of the network, we get:

where \(\sum_i S(y_i) = 1\).
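The softmax step can be sketched in NumPy as follows. The raw output values are hypothetical and chosen only for illustration; subtracting the maximum before exponentiating is a common numerical safeguard and does not change the result.

```python
import numpy as np

def softmax(y):
    """Map raw network outputs to probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow in exp.
    shifted = y - np.max(y)
    exp_y = np.exp(shifted)
    return exp_y / np.sum(exp_y)

# Hypothetical raw outputs for the classes boat, dog and cat (illustrative values only).
outputs = np.array([2.0, 1.0, 0.1])
probs = softmax(outputs)

print(probs)
print(np.sum(probs))
```

The largest raw output receives the largest probability, and the ordering of the classes is preserved.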

x = np.linspace(0.01, 1, 100)  # probabilities in (0, 1]

plt.plot(x, -np.log(x))  # the loss -log(p) grows as p approaches 0


If in our example above our random sample is a boat, we get:

If it were a dog, we would get:

Wrong predictions have a high error. Thus, by minimizing this function, we gradually improve our model.
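The effect can be sketched with a few lines of NumPy. The probabilities below are hypothetical softmax outputs for a single image and are not the values of the example above; the sketch computes the loss that would result if each class in turn were the correct one.

```python
import numpy as np

# Hypothetical softmax probabilities for one image (illustrative values only).
classes = ["boat", "dog", "cat"]
probs = np.array([0.7, 0.2, 0.1])

# Loss the network would receive if each class in turn were the correct one.
losses = {name: float(-np.log(p)) for name, p in zip(classes, probs)}

for name, loss in losses.items():
    print(f"{name}: {loss:.3f}")
```

The less probability the network assigns to the true class, the larger the loss becomes.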